Version information

| Name | Version |
| --- | --- |
| OS | CentOS Linux release 7.3.1611 (Core) |
| Java | java version "1.8.0_101" |
| Elasticsearch | Elasticsearch 5.5.2 |
| Logstash | logstash-5.5.2 |
| Kibana | Kibana 5.5.2 |
| Redis | redis-4.0.1 |

Related links

Download: ELK

ELK documentation (Chinese): ELK doc

| Hostname | IP | Components |
| --- | --- | --- |
| elk01 | 10.20.161.155 | logstash, elasticsearch, redis, kibana, nginx, VIP 10.20.161.200 |
| elk02 | 10.20.161.161 | logstash, elasticsearch, redis, kibana, nginx, VIP 10.20.161.200 |

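ELK cluster scenario

- Many companies put nginx at the very front as a reverse proxy and make it highly available with Keepalived.

- This setup uses ELK to collect and analyze the logs of that nginx + keepalived architecture, and the logs are collected in JSON format.

- The document covers Redis redundancy and an ELK cluster.

- All roles are deployed on elk01 and elk02; readers familiar with the stack can spread the roles across separate servers or Docker containers.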

Nginx + Keepalived (skipped)

Plenty of guides are available through Google or Baidu, so this part is not repeated here.

Redis + Keepalived redundancy

elk01 and elk02

System tuning

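```shell
# let Redis fork for background saves even when memory looks overcommitted
echo "vm.overcommit_memory = 1" >> /etc/sysctl.conf
# disable transparent huge pages (Redis warns about THP latency); not persistent across reboots
echo never > /sys/kernel/mm/transparent_hugepage/enabled
sysctl -p
```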
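The `echo never` setting lives in sysfs and is lost on reboot, so it must be reapplied at boot. One option is `/etc/rc.d/rc.local`, which on CentOS 7 has to be made executable before it runs. The sketch below is a hypothetical helper, not part of the original setup, that reads both settings back. The file paths are parameters (defaulting to the real kernel files) so the helper can also be pointed at test files.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify the kernel settings Redis warns about.

# Print the active THP mode; the sysfs file marks it with brackets,
# e.g. "always madvise [never]" -> "never".
thp_mode() {
  local file=${1:-/sys/kernel/mm/transparent_hugepage/enabled}
  sed -n 's/.*\[\([a-z]*\)\].*/\1/p' "$file"
}

# Print the current vm.overcommit_memory value (expected: 1).
overcommit_value() {
  local file=${1:-/proc/sys/vm/overcommit_memory}
  tr -d '[:space:]' < "$file"
}

# Succeed only if THP is "never" and overcommit_memory is 1.
check_redis_kernel_settings() {
  local thp overcommit
  thp=$(thp_mode "$1")
  overcommit=$(overcommit_value "$2")
  [ "$thp" = "never" ] || { echo "THP is '$thp', expected 'never'"; return 1; }
  [ "$overcommit" = "1" ] || { echo "overcommit_memory is '$overcommit', expected 1"; return 1; }
  echo "kernel settings OK"
}
```

Run `check_redis_kernel_settings` with no arguments on elk01 and elk02 after a reboot to confirm that both settings survived.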

Redis installation

```shell
tar xf redis-4.0.1.tar.gz
mkdir -p /home/app
mkdir -p /data/logs/redis
mkdir -p /data/redis
mv redis-4.0.1 /home/app/
cd /home/app/redis-4.0.1/
make && make install
```

- Redis configuration on elk01:

vim /home/app/redis-4.0.1/redis.conf

```conf
requirepass elk123
masterauth elk123
protected-mode yes
port 6379
tcp-backlog 260000
timeout 0
tcp-keepalive 300
daemonize yes
supervised no
pidfile /var/run/redis_6379.pid
loglevel notice
logfile "/data/logs/redis/redis.log"
databases 16
save 900 1
save 300 10
save 60 10000
stop-writes-on-bgsave-error yes
rdbcompression yes
rdbchecksum yes
dbfilename dump.rdb
dir /data/redis
slave-serve-stale-data yes
slave-read-only yes
repl-diskless-sync no
repl-diskless-sync-delay 5
repl-disable-tcp-nodelay no
slave-priority 100
appendonly no
appendfilename "appendonly.aof"
appendfsync everysec
no-appendfsync-on-rewrite no
auto-aof-rewrite-percentage 100
auto-aof-rewrite-min-size 64mb
aof-load-truncated yes
lua-time-limit 5000
slowlog-log-slower-than 10000
slowlog-max-len 128
latency-monitor-threshold 0
notify-keyspace-events ""
hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-size -2
list-compress-depth 0
set-max-intset-entries 512
zset-max-ziplist-entries 128
zset-max-ziplist-value 64
hll-sparse-max-bytes 3000
activerehashing yes
client-output-buffer-limit normal 0 0 0
client-output-buffer-limit slave 256mb 64mb 60
client-output-buffer-limit pubsub 32mb 8mb 60
hz 10
aof-rewrite-incremental-fsync yes
```

- Redis configuration on elk02 (same file path; identical to elk01 except for the final `slaveof` line):

```conf
requirepass elk123
masterauth elk123
protected-mode yes
port 6379
tcp-backlog 260000
timeout 0
tcp-keepalive 300
daemonize yes
supervised no
pidfile /var/run/redis_6379.pid
loglevel notice
logfile "/data/logs/redis/redis.log"
databases 16
save 900 1
save 300 10
save 60 10000
stop-writes-on-bgsave-error yes
rdbcompression yes
rdbchecksum yes
dbfilename dump.rdb
dir /data/redis
slave-serve-stale-data yes
slave-read-only yes
repl-diskless-sync no
repl-diskless-sync-delay 5
repl-disable-tcp-nodelay no
slave-priority 100
appendonly no
appendfilename "appendonly.aof"
appendfsync everysec
no-appendfsync-on-rewrite no
auto-aof-rewrite-percentage 100
auto-aof-rewrite-min-size 64mb
aof-load-truncated yes
lua-time-limit 5000
slowlog-log-slower-than 10000
slowlog-max-len 128
latency-monitor-threshold 0
notify-keyspace-events ""
hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-size -2
list-compress-depth 0
set-max-intset-entries 512
zset-max-ziplist-entries 128
zset-max-ziplist-value 64
hll-sparse-max-bytes 3000
activerehashing yes
client-output-buffer-limit normal 0 0 0
client-output-buffer-limit slave 256mb 64mb 60
client-output-buffer-limit pubsub 32mb 8mb 60
hz 10
aof-rewrite-incremental-fsync yes
# elk02 starts as a slave of elk01
slaveof 10.20.161.155 6379
```
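Both nodes set `masterauth` equal to `requirepass`. After a failover, either node may end up as the slave, and a slave must authenticate to its master with that master's `requirepass`. The following is a hypothetical helper, not from the original article, that checks a config file for this pairing. The default path is the one used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of a directive in a redis.conf-style
# file (last occurrence wins, commented-out lines are ignored).
redis_conf_value() {
  local file=$1 key=$2
  awk -v k="$key" '$1 == k { $1 = ""; sub(/^ +/, ""); v = $0 } END { print v }' "$file"
}

# Succeed only if masterauth is set and equals requirepass.
check_auth_pair() {
  local file=${1:-/home/app/redis-4.0.1/redis.conf} pass auth
  pass=$(redis_conf_value "$file" requirepass)
  auth=$(redis_conf_value "$file" masterauth)
  if [ -n "$auth" ] && [ "$auth" = "$pass" ]; then
    echo "ok"
  else
    echo "masterauth ('$auth') does not match requirepass ('$pass')"
    return 1
  fi
}
```

For example, on elk02, `redis_conf_value /home/app/redis-4.0.1/redis.conf slaveof` prints `10.20.161.155 6379`.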

Init script

Contents of /etc/init.d/redis-server (make it executable with chmod +x /etc/init.d/redis-server):

```shell
#!/usr/bin/env bash
#
# redis-server    Start/stop the Redis server daemon
#
# chkconfig: 345 99 99
# description: Redis service in /etc/init.d/redis-server
#   Register: chkconfig --add redis-server ; chkconfig --list redis-server
#   Use:      service redis-server start|stop|status|restart
# processname: redis-server
# config: /home/app/redis-4.0.1/redis.conf

PATH=/usr/local/bin:/sbin:/usr/bin:/bin
PASSWD='elk123'
REDISPORT=6379
EXEC=/usr/local/bin/redis-server
REDIS_CLI=/usr/local/bin/redis-cli
PIDFILE=/var/run/redis_6379.pid        # must match "pidfile" in redis.conf
CONF="/home/app/redis-4.0.1/redis.conf"

case "$1" in
  status)
    ps -A | grep redis
    ;;
  start)
    if [ -f "$PIDFILE" ]; then
      echo "$PIDFILE exists, process is already running or crashed"
    else
      echo "Starting Redis server..."
      # "daemonize yes" makes redis-server return once the daemon has forked
      if $EXEC "$CONF"; then
        echo "Redis is running..."
      fi
    fi
    ;;
  stop)
    if [ ! -f "$PIDFILE" ]; then
      echo "$PIDFILE does not exist, process is not running"
    else
      echo "Stopping ..."
      $REDIS_CLI -p $REDISPORT -a "$PASSWD" SHUTDOWN
      # Redis deletes its pidfile on a clean shutdown; wait until it is gone
      while [ -f "$PIDFILE" ]; do
        echo "Waiting for Redis to shutdown ..."
        sleep 1
      done
      echo "Redis stopped"
    fi
    ;;
  restart|force-reload)
    "$0" stop
    "$0" start
    ;;
  *)
    echo "Usage: /etc/init.d/redis-server {start|stop|status|restart|force-reload}" >&2
    exit 1
    ;;
esac
```

Start Redis on elk01 and elk02

/etc/init.d/redis-server start

Check that master/slave replication works

```shell
# on elk01
redis-cli -a elk123
set leon aaa

# on elk02
redis-cli -a elk123
get leon
```

If elk02 returns "aaa", master/slave replication is working.
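Instead of writing a test key, you can also read the replication state from `INFO replication`. The filter below is a small hypothetical one, not from the original article. It condenses that output into one line: elk01 should report `master, connected_slaves=1` and elk02 should report `slave, master_link_status=up`.

```shell
#!/usr/bin/env bash
# Hypothetical filter: condense "INFO replication" output into one line.
# Usage: redis-cli -a elk123 INFO replication | repl_summary
repl_summary() {
  # INFO replies use CRLF line endings; drop the \r before parsing.
  tr -d '\r' | awk -F: '
    $1 == "role"               { role = $2 }
    $1 == "connected_slaves"   { slaves = $2 }
    $1 == "master_link_status" { link = $2 }
    END {
      if (role == "master") printf "master, connected_slaves=%s\n", slaves
      else                  printf "%s, master_link_status=%s\n", role, link
    }'
}
```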

Keepalived installation

elk01 and elk02

yum install keepalived

elk01

vim /etc/keepalived/keepalived.conf

```conf
vrrp_instance elk_test_ha {
    state MASTER              ### start as MASTER
    interface eth0            ### interface VRRP runs on
    virtual_router_id 80
    priority 100              ### priority (higher wins)
    # keepalived only honours nopreempt when the initial state is BACKUP
    nopreempt
    authentication {
        auth_type PASS        ### simple password authentication
        auth_pass 201709      ### password
    }
    virtual_ipaddress {
        10.20.161.200         ### VIP
    }
    notify_master /root/scripts/keepalived/redis_master.sh
    notify_backup /root/scripts/keepalived/redis_backup.sh
    notify_fault /root/scripts/keepalived/redis_fault.sh
    notify_stop /root/scripts/keepalived/redis_stop.sh
}
```

elk02

```conf
vrrp_instance elk_test_ha {
    state BACKUP              ### start as BACKUP
    interface eth0            ### interface VRRP runs on
    virtual_router_id 80
    priority 10               ### priority (lower than elk01)
    authentication {
        auth_type PASS        ### simple password authentication
        auth_pass 201709      ### password
    }
    virtual_ipaddress {
        10.20.161.200         ### VIP
    }
    notify_master /root/scripts/keepalived/redis_master.sh
    notify_backup /root/scripts/keepalived/redis_backup.sh
    notify_fault /root/scripts/keepalived/redis_fault.sh
    notify_stop /root/scripts/keepalived/redis_stop.sh
}
```

Redis failover test

elk01

```shell
redis-cli -a elk123 "ROLE"           # check the replication role
systemctl stop keepalived.service    # stop keepalived to trigger a failover
ip addr                              # check whether the VIP has moved away
redis-cli -a elk123 "ROLE"           # check that Redis has become a slave
```
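You can script the `ip addr` check as well. The following is a hypothetical helper, not part of the original procedure, that reads `ip -o -4 addr show` output. It reports whether this node currently holds the VIP, which defaults to 10.20.161.200.

```shell
#!/usr/bin/env bash
# Hypothetical helper: does this node currently hold the VIP?
# Usage: ip -o -4 addr show | holds_vip 10.20.161.200
holds_vip() {
  local vip=${1:-10.20.161.200}
  # -F matches the address literally (dots are not regex wildcards);
  # the trailing "/" stops 10.20.161.20 from matching 10.20.161.200
  if grep -qF "inet ${vip}/"; then
    echo "VIP ${vip} is on this node"
  else
    echo "VIP ${vip} is not on this node"
    return 1
  fi
}
```

After stopping keepalived on elk01, this should fail on elk01 and succeed on elk02.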

elk02

```shell
redis-cli -a elk123 "ROLE"    # check the replication role (should now be master)
redis-cli -a elk123           # try writing data
set elk test
```

elk01

```shell
redis-cli -a elk123
get elk
```

If you see the following, the failover worked:

```
127.0.0.1:6379> get elk
"test"
```

systemctl enable keepalived.service

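The keepalived notify scripts are not included here. They only check the state and switch Redis between master and slave with redis-cli commands, without changing any configuration file. As a result, the node configured as MASTER still actively takes the VIP back when it returns, because keepalived ignores `nopreempt` unless `state` is BACKUP.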
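A minimal sketch of what such notify logic could look like follows. It is not the author's script. It assumes the password elk123 and port 6379, and `PEER_IP` is a hypothetical variable holding the other node's address (10.20.161.161 on elk01, 10.20.161.155 on elk02). On becoming MASTER, the local Redis is promoted with `SLAVEOF NO ONE`. On BACKUP, FAULT or STOP, it is pointed at the peer, which matches the test above where elk01 turns into a slave after keepalived stops.

```shell
#!/usr/bin/env bash
# Hypothetical keepalived notify helper (not the original scripts).
# redis_master.sh would run "<this script> master";
# redis_backup.sh, redis_fault.sh and redis_stop.sh would run "<this script> backup".
REDIS_CLI=${REDIS_CLI:-/usr/local/bin/redis-cli}
REDIS_PASS=${REDIS_PASS:-elk123}
REDIS_PORT=${REDIS_PORT:-6379}
PEER_IP=${PEER_IP:-10.20.161.161}        # on elk02 set this to 10.20.161.155
LOG=${LOG:-/var/log/keepalived-redis.log}

redis_cmd() {
  "$REDIS_CLI" -a "$REDIS_PASS" -p "$REDIS_PORT" "$@"
}

# The VIP moved here: stop replicating and accept writes.
become_master() {
  echo "$(date '+%F %T') MASTER: SLAVEOF NO ONE" >> "$LOG"
  redis_cmd SLAVEOF NO ONE
}

# The VIP left (backup/fault/stop): replicate from the peer instead.
become_backup() {
  echo "$(date '+%F %T') BACKUP: SLAVEOF $PEER_IP $REDIS_PORT" >> "$LOG"
  redis_cmd SLAVEOF "$PEER_IP" "$REDIS_PORT"
}

case "${1:-}" in
  master) become_master ;;
  backup) become_backup ;;
esac
```

Because the role switch happens only at runtime, a restarted Redis falls back to whatever its redis.conf says, which is the behaviour the paragraph above describes.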

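ELK installation

Elasticsearch installation

- By default, Elasticsearch serves HTTP on port 9200 and uses TCP port 9300 for node-to-node communication; make sure both ports are open.

- Elasticsearch refuses to run as root. The RPM package creates an `elasticsearch` user and runs the service as that user.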
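If firewalld is running on CentOS 7, open the ports used in this document on both nodes. These are 9200 and 9300 for Elasticsearch, 6379 for Redis, 5601 for Kibana and 9100 for elasticsearch-head, plus the VRRP protocol for keepalived. The sketch below assumes firewalld is the active firewall. `FIREWALL_CMD` is a hypothetical override that exists only to dry-run the function.

```shell
#!/usr/bin/env bash
# Sketch: open the ports used in this document with firewalld.
FIREWALL_CMD=${FIREWALL_CMD:-firewall-cmd}
ELK_PORTS="9200/tcp 9300/tcp 6379/tcp 5601/tcp 9100/tcp"

open_elk_ports() {
  local p
  for p in $ELK_PORTS; do            # word splitting over the list is intended
    "$FIREWALL_CMD" --permanent --add-port="$p" || return 1
  done
  # keepalived nodes exchange VRRP advertisements (IP protocol 112)
  "$FIREWALL_CMD" --permanent --add-rich-rule='rule protocol value="vrrp" accept' || return 1
  "$FIREWALL_CMD" --reload
}
```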

yum install java-1.8.0-openjdk -y

rpm -ivh elasticsearch-5.5.2.rpm

vim /etc/elasticsearch/elasticsearch.yml

elk01

1

2

3

4

5

6

7

8

9

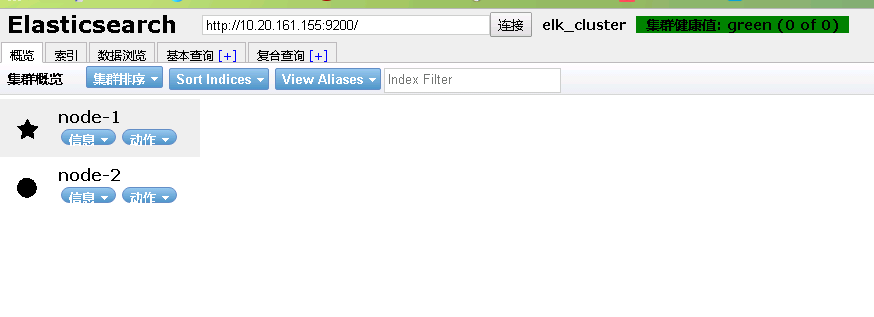

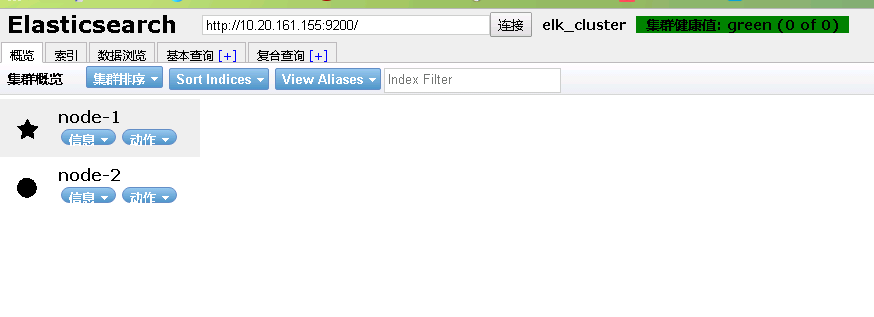

| cluster.name: elk_cluster

node.name: node-1

node.attr.rack: r1

path.data: /data/elasticsearch/

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.unicast.hosts: ["10.20.161.155", "10.20.161.161"]

http.cors.enabled: true #允许跨域访问

http.cors.allow-origin: "*"

|

elk02

```yaml
cluster.name: elk_cluster
node.name: node-2
node.attr.rack: r1
path.data: /data/elasticsearch/
network.host: 0.0.0.0
http.port: 9200
discovery.zen.ping.unicast.hosts: ["10.20.161.155", "10.20.161.161"]
http.cors.enabled: true        # allow cross-origin requests (used by elasticsearch-head)
http.cors.allow-origin: "*"
```

```shell
mkdir -p /data/elasticsearch
chown -R elasticsearch:elasticsearch /data/elasticsearch/
```

```shell
systemctl start elasticsearch.service
systemctl enable elasticsearch.service
systemctl status elasticsearch.service
```

- Test each node (a successful response looks like the following):

curl -X GET http://10.20.161.155:9200

curl -X GET http://10.20.161.161:9200

```json
{
  "name" : "node-1",
  "cluster_name" : "elk_cluster",
  "cluster_uuid" : "22PqfDC3SnqYdyuo6zvl1w",
  "version" : {
    "number" : "5.5.2",
    "build_hash" : "b2f0c09",
    "build_date" : "2017-08-14T12:33:14.154Z",
    "build_snapshot" : false,
    "lucene_version" : "6.6.0"
  },
  "tagline" : "You Know, for Search"
}
```
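A response on port 9200 from each node does not prove that the two nodes formed one cluster. `_cluster/health` reports the cluster status and node count, so expect `status=green nodes=2` once both nodes have joined. The following is a hypothetical filter that extracts those two fields with sed. It works for this flat response but is not a general JSON parser.

```shell
#!/usr/bin/env bash
# Hypothetical filter for the _cluster/health response.
# Usage: curl -s http://10.20.161.155:9200/_cluster/health | es_health
es_health() {
  local json status nodes
  json=$(tr -d '\n')   # join pretty-printed output onto one line
  status=$(printf '%s' "$json" | sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p')
  nodes=$(printf '%s' "$json" | sed -n 's/.*"number_of_nodes"[[:space:]]*:[[:space:]]*\([0-9]*\).*/\1/p')
  echo "status=${status} nodes=${nodes}"
}
```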

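Install elasticsearch-head, which is a Node.js application. First add a repository that provides nodejs, npm and http-parser:

```shell
# quoting 'EOF' keeps $basearch literal so that yum, not the shell, expands it
cat << 'EOF' > /etc/yum.repos.d/pkg.repo
[pkgs]
name=Extra Packages for Enterprise Linux 7 - $basearch
baseurl=http://springdale.math.ias.edu/data/puias/unsupported/7/x86_64/
enabled=1
gpgcheck=0
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7
EOF
```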

yum install -y nodejs nodejs-npm http-parser

cd /etc/elasticsearch/

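```shell
# GitHub no longer serves the unauthenticated git:// protocol, so clone over https
git clone https://github.com/mobz/elasticsearch-head.git
mv /etc/elasticsearch/elasticsearch-head/ /etc/elasticsearch/head/
chown -R root:elasticsearch /etc/elasticsearch/
cd /etc/elasticsearch/head/        # npm must run inside the project directory
npm install
npm run start                      # serves the UI on port 9100 (runs in the foreground)
```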

Access URLs

http://10.20.161.155:9100/

http://10.20.161.161:9100/

See the figure.

Nginx installation

- Installing nginx itself is not covered here.

- Log configuration:

vim /home/app/nginx/nginx.conf

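```nginx
# nginx >= 1.11.8 also accepts "log_format json escape=json ...", which keeps
# quotes inside values valid JSON
log_format json '{"@timestamp":"$time_iso8601",'              # request time (ISO 8601)
                '"request_time":"$request_time",'             # total request processing time
                '"upstream_time":"$upstream_response_time",'  # upstream (backend) response time
                '"client_ip":"$remote_addr",'                 # client IP (remote address)
                '"xff_ip":"$http_x_forwarded_for",'           # client IP (X-Forwarded-For)
                '"upstream_ip":"$upstream_addr",'             # upstream server address
                '"http_host":"$host",'                        # site host name (or IP)
                '"request":"$request",'                       # request line
                '"status":"$status",'                         # response status (200, 500, ...)
                '"size":"$body_bytes_sent",'                  # response body size in bytes, excluding headers
                '"referer":"$http_referer",'                  # referring page
                '"agent":"$http_user_agent"}';                # client user agent

# write the access log in this format to the file the Logstash agent reads
access_log /data/logs/nginx/access.log json;
```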

Logstash installation (server and agent)

rpm -ivh logstash-5.5.2.rpm

- Server side

vim /etc/logstash/conf.d/server_nginx.conf

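```conf
# Logstash 5.x merges every *.conf file in /etc/logstash/conf.d into one
# pipeline, so the output below only handles events from this redis input.
input {
  redis {
    host      => "10.20.161.200"      # Redis VIP
    port      => 6379
    data_type => "list"
    key       => "logstash:redis"
    type      => "redis-input"
    password  => "elk123"
    add_field => { "[@metadata][pipeline]" => "server" }
  }
}

filter {
  json {
    source => "message"
  }
}

output {
  if [@metadata][pipeline] == "server" {
    elasticsearch {
      hosts => ["10.20.161.200:9200"]
      index => "nginx-access-log-%{+YYYY.MM.dd}"
    }
  }
}
```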
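The `%{+YYYY.MM.dd}` in the index name is formatted from the event's `@timestamp` in UTC, not in the server's local time. If nginx logs in UTC+8, for example, requests made between 00:00 and 08:00 local time land in the previous day's index. The following is a hypothetical helper that assumes GNU `date` (as shipped with CentOS 7). It computes which index a given nginx `$time_iso8601` value ends up in.

```shell
#!/usr/bin/env bash
# Hypothetical helper: which daily index does an nginx $time_iso8601 value
# end up in? Logstash formats %{+YYYY.MM.dd} from @timestamp in UTC.
index_for_time() {
  local ts=$1 prefix=${2:-nginx-access-log}
  local day
  day=$(date -u -d "$ts" '+%Y.%m.%d') || return 1
  echo "${prefix}-${day}"
}
```

For example, `index_for_time 2017-09-01T07:30:00+08:00` prints `nginx-access-log-2017.08.31`.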

- Agent side

vim /etc/logstash/conf.d/agent_nginx.conf

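```conf
# Logstash 5.x merges every *.conf file in /etc/logstash/conf.d into one
# pipeline, so the output below only ships events read by this file input
# (otherwise events read back from Redis would be pushed to Redis again).
input {
  file {
    type      => "nginx-access-log"
    path      => ["/data/logs/nginx/access.log"]
    codec     => "json"
    add_field => { "[@metadata][pipeline]" => "agent" }
  }
}

output {
  if [@metadata][pipeline] == "agent" {
    redis {
      host      => "10.20.161.200:6379"   # Redis VIP
      data_type => "list"
      key       => "logstash:redis"
      password  => "elk123"
    }
  }
}
```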

Start Logstash

systemctl start logstash.service

systemctl enable logstash.service
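Once both Logstash instances are running, the daily indices should appear in Elasticsearch. `_cat/indices` with the `h=` parameter lists only the requested columns. The following is a hypothetical filter that totals the document count across the `nginx-access-log-*` indices, which is useful for checking that logs are flowing before you open Kibana.

```shell
#!/usr/bin/env bash
# Hypothetical filter: total documents across nginx-access-log-* indices.
# Usage:
#   curl -s 'http://10.20.161.200:9200/_cat/indices/nginx-access-log-*?h=index,docs.count' | nginx_doc_total
nginx_doc_total() {
  awk '$1 ~ /^nginx-access-log-/ { n++; docs += $2 }
       END { printf "indices=%d docs=%d\n", n, docs }'
}
```

If the document count keeps rising while nginx serves requests, the agent → Redis → server → Elasticsearch path is working.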

Kibana installation

rpm -ivh kibana-5.5.2-x86_64.rpm

vim /etc/kibana/kibana.yml

```yaml
server.host: "0.0.0.0"
elasticsearch.url: "http://10.20.161.200:9200"
```

systemctl enable kibana.service

systemctl start kibana.service

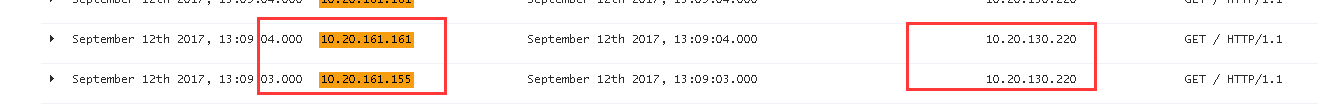

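Open http://10.20.161.200:5601. If the Kibana page loads, the installation is complete; create the index pattern `nginx-access-log-*` to browse the logs.

Notes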

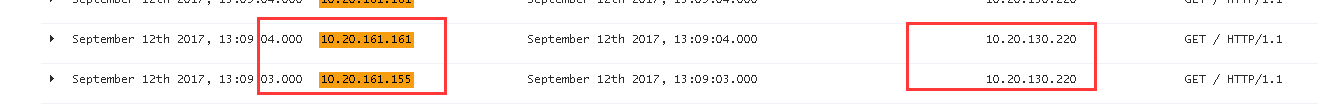

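- For testing, the Logstash server and agent run on the same machine. In practice, put the agent on whichever servers produce the logs you need to collect.

- This setup addresses ELK redundancy. Elasticsearch runs as a cluster, and both Logstash servers pull data from the Redis master and write it into Elasticsearch through the node that holds the VIP.

- If server 155 goes down, the VIP moves to 161 and the Redis slave there is promoted to master. The Logstash agents follow the VIP and send data to the newly promoted Redis. The Logstash server on 161 keeps pulling data from Redis into Elasticsearch, and because Elasticsearch is a cluster, the node on 161 can continue to store data.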

Known issues

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console

This message can be ignored.
